Llama CPP is a new tool designed to run language models directly in C/C++. This tool is specially optimized for Apple Silicon processors through the use of ARM NEON technology and the Accelerate framework. It also offers AVX2 compatibility for systems based on x86 architectures. Operating mainly on the CPU, Llama CPP also integrates 4-bit quantization capability, further enhancing its efficiency. Its advantage is that it allows for the execution of a language model directly on a personal computer without requiring a GPU with a significant amount of VRAM. Simply put, there's no need to sell your car to test the latest trendy models. Let's be clear, even though the tool allows you to dive headfirst into the world of LLMs at a lower cost, you will need to arm yourself with patience. Processing times can sometimes be monstrous. Shall we test it together? You will see, it is not uncommon to wait 5 minutes to get a response. And that's okay, the idea here is to run the system on your workstation. You will understand why the stars of hosting ask for a few hundred/thousand euros to run your models in the cloud.

To date, here is the list of compatible models:

- LLaMA 🦙

- LLaMA 2 🦙🦙

- Falcon

- Alpaca

- GPT4All

- Chinese LLaMA / Alpaca and Chinese LLaMA-2 / Alpaca-2

- Vigogne (French)

- Vicuna

- Koala

- OpenBuddy 🐶 (Multilingual)

- Pygmalion/Metharme

- WizardLM

- Baichuan 1 & 2 + derivations

- Aquila 1 & 2

- Starcoder models

- Mistral AI v0.1

- Refact

- Persimmon 8B

- MPT

- Bloom

- StableLM-3b-4e1t

The first step, then, will be to install and compile Llama cpp. We'll assume that you are a developer, and like any good developer, you use Linux. Not the case? No worry, use Docker to simulate a machine running the same OS as this article, namely Ubuntu 22.04. You could use this docker-compose.yml as an example:

version: '3.8'

services:

ubuntu:

image: ubuntu:22.04

command: /bin/bash

stdin_open: true

tty: true

working_dir: /workspace

Docker compose up

Docker compose exec ubuntu bash

Are we there yet? Let's proceed. Prerequisites: install the necessary tools to run the program. You'll need compilation tools, but also tools that allow you to clone GitHub projects and a token giving you access to Huggingface, the famous repository of open-source LLMs.

apt-get update

apt-get install -y git cmake g++ make curl gh python3-pip

curl -fsSL https://cli.github.com/packages/githubcli-archive-keyring.gpg | sudo dd of=/usr/share/keyrings/githubcli-archive-keyring.gpg

sudo chmod go+r /usr/share/keyrings/githubcli-archive-keyring.gpg

echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/githubcli-archive-keyring.g

pg] https://cli.github.com/packages stable main" | sudo tee /etc/apt/sources.list.d/github-cli.list > /dev/null

pip install numpy

pip install sentencepiece

pip install -U "huggingface_hub[cli]"

To create a Hugging Face access token, here is the link (it can be a little tricky to find in the interface): https://huggingface.co/settings/tokens

If you don't yet have a token on GitHub, here is also a link: https://github.com/settings/tokens/new

Now we will clone and compile llama.cpp.

# Cloner le dépôt Git de llama-cpp

gh repo clone https://github.com/ggerganov/llama.cpp.git

cd llama.cpp

# Compiler le projet

mkdir build

cd build

cmake ..

cmake --build . --config Release

Now, we are going to download the latest model crafted by MistralAi. You will find the model on Huggingface here: https://huggingface.co/mistralai

For more clarity, you can directly add llama.cpp to your environment. This will allow us to focus on the commands without cluttering our console with paths that will not bring us anything. For example:

export PATH="/Data/Projets/Llm/src/Llama-cpp/llama.cpp/build/bin:$PATH"

Model Download: replace $HUGGINGFACE_TOKEN with your token.

mkdir /Data/Projets/Llm/Models/mistralai

huggingface-cli login --token $HUGGINGFACE_TOKEN

huggingface-cli download mistralai/Mistral-7B-v0.1 --local-dir /Data/Projets/Llm/Models/mistralai

Serve yourself a coffee; it's 15 gigabytes. Some large files will only be symbolic links to the cache directory. You can specify --cache-dir to write them to another partition if needed. Which could give us something like this:

huggingface-cli download mistralai/Mistral-7B-v0.1 --local-dir /Data/Projets/Llm/Models/mistralai --cache-dir /Data/Projets/Llm/Models/huggingface_cache/

Once we have downloaded our files in PyTorch format, we will need to proceed with a little conversion. We return to our llama.cpp directory and we run the following command:

python3 llama.cpp/convert.py /Data/Projets/Llm/Models/mistralai

--outfile /Data/Projets/Llm/Models/mistralai/Mistral-7B-v0.1.gguf

--outtype f16

What is this --outtype q8_0 parameter?? It's Quantization. Quantization is a process aimed at reducing the size and complexity of models. Imagine a sculptor who starts with a huge block of marble and carves it to create a statue. Quantization is a bit like removing pieces of the block to make it lighter and easier to move, while ensuring that the statue retains its beauty and form. In the context of LLMs, quantization involves simplifying the representation of the model's weights. Weights in an LLM are numerical values that determine how the model reacts to different inputs. By reducing the precision of these weights (for example, from 32-bit floating-point values to 8-bit values), we reduce the overall size of the model and the amount of computation necessary to run it. This has several benefits. First, it makes the model faster and less energy-intensive, which is crucial for applications on mobile devices or low-capacity servers. Second, it makes AI more accessible because it can run on a wider range of devices, including those without cutting-edge computing hardware. However, quantization must be carefully managed. Too much reduction in precision can lead to a loss of performance, where the model becomes less accurate or less reliable. It's all about finding the right balance between efficiency and performance.

- 2-Bit Quantization - q2_k: Uses Q4_K for the attention.vw and feed_forward.w2 tensors, and Q2_K for the others. This means certain key tensors have a slightly higher precision (4 bits) to maintain performance, whereas the rest are more compressed (2 bits).

-

3-Bit Quantization - q3_k_l: Employs Q5_K for attention.wv, attention.wo, and feed_forward.w2, and Q3_K for the others. This indicates a higher precision (5 bits) for some important tensors, while the others are at 3 bits.

- q3_k_m: Uses Q4_K for the same tensors as q3_k_l, but with a slightly lower precision (4 bits), and Q3_K for the others.

- q3_k_s: Applies Q3_K quantization to all tensors, offering uniformity but with less precision.

-

4-Bit Quantization - q4_0: Original 4-bit quantization method.

- q4_1: Offers higher precision than q4_0, but lower than q5_0, with faster inference than q5 models.

- q4_k_m: Uses Q6_K for half of the attention.wv and feed_forward.w2 tensors, and Q4_K for the others.

- q4_k_s: Applies Q4_K to all tensors.

-

5-Bit Quantization - q5_0: Provides higher precision but requires more resources and slows inference.

- q5_1: Offers even higher precision at the expense of increased resource use and slower inference.

- q5_k_m: Like q4_k_m, but with Q6_K for some tensors and Q5_K for the others.

- q5_k_s: Uses Q5_K for all tensors.

- 6-Bit Quantization - q6_k: Employs a Q8_K quantization for all tensors, offering relatively high precision.

- 8-Bit Quantization - q8_0: This method achieves a quantization nearly indistinguishable from the float16 format but requires a lot of resources and slows inference.

The precision of a quantization method, therefore, depends on the number of bits used to represent the model's weights.

- Most Precise: The q6_k (6-Bit Quantization) method, using Q8_K for all tensors, would likely be the most precise among our list. It uses 8 bits for each weight, allowing for a more detailed representation and closer to the original values. This translates into better preservation of the model's nuances and details but at the expense of greater resource use and potentially longer inference time.

- Least Precise: The q2_k (2-Bit Quantization) method would be the least precise. This method uses only 2 bits for the majority of the tensors, with some exceptions at 4 bits (Q4_K) for the attention.vw and feed_forward.w2 tensors. The 2-bit representation is extremely compact but also far more likely to lose important information since it can only represent four different values per weight compared to 256 values in an 8-bit quantization.

This is the theory, our tool offers us three types of conversions: --outtype {f32,f16,q8_0}

- f32 (Float 32-bit): This is the standard high-precision representation for floating-point numbers in many computer calculations, including in LLMs. Each weight is stored with a 32-bit precision, offering great accuracy but also a large model size and resource use. This option is not a form of quantization but rather the standard format without quantization.

- f16 (Float 16-bit): This is a more compact version of f32, where each weight is stored with a 16-bit precision. This reduces the model size and the necessary computational amount, with a minor loss of precision compared to f32. Although it is a form of compression, it is not technically quantization as we discussed earlier.

- q8_0 (8-Bit Quantization): This refers directly to the 8-bit quantization method mentioned earlier. In this method, each weight is represented with 8 bits. It is the highest quantization among the given options and is almost comparable to f16 in terms of precision. It provides a good balance between reducing the model size and preserving precision.

In summary, f32 offers the greatest precision but at the largest size, f16 reduces this size by half with a slight loss of precision, and q8_0 further reduces the size while offering precision that is generally sufficient for many applications. These options reflect different trade-offs between precision and efficiency in terms of size and computation.

- f32: will give you a 27-gigabyte file. You'll have to ensure you have enough RAM for it. Indeed, Llama.cpp will load the entire file into RAM. Execution simply crashed on my machine.

- f16: will give you a 14-gigabyte file. Executing an instruction will result in this:

main -m /Data/Projets/Llm/Models/mistralai/Mistral-7B-v0.1.gguf -i --interactive-first -r "### Human:" --temp 0 -c 2048 -n -1 --ignore-eos --repeat_penalty 1.2 --instruct

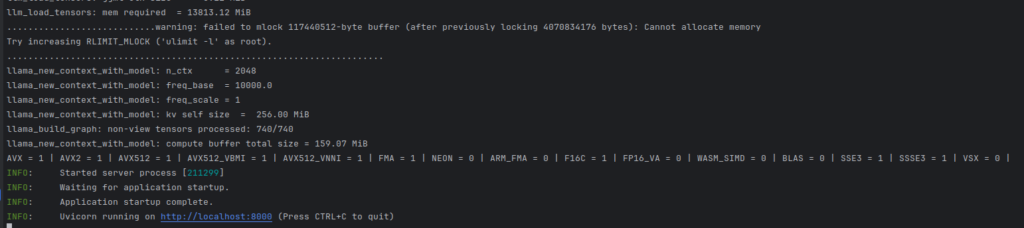

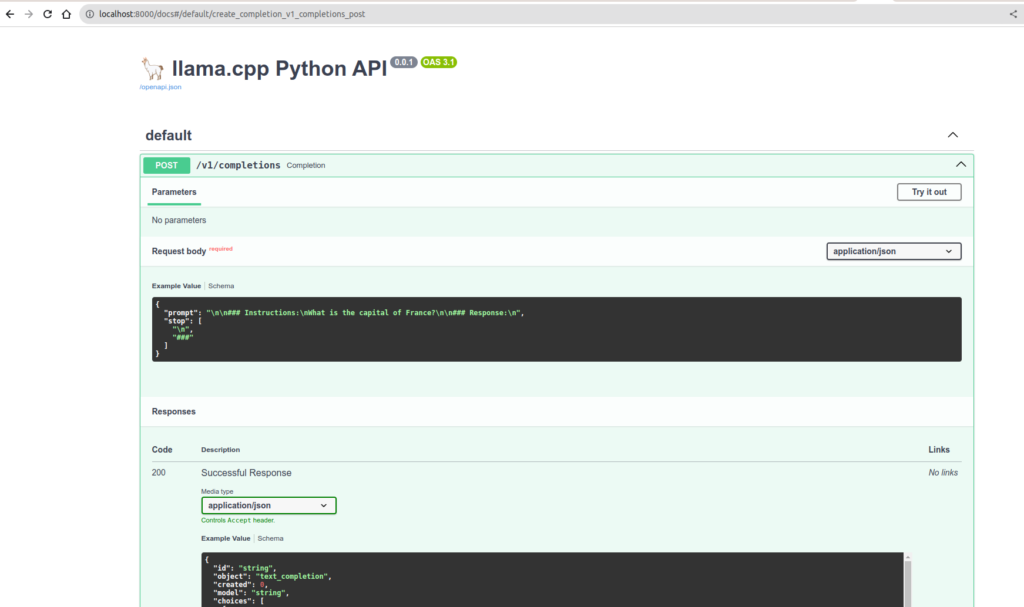

You have the option of directly using a server. The following command allows you to install llama-cpp and a server compatible with OpenAPI.

pip install llama-cpp-python[server]

python3 -m llama_cpp.server --model /Data/Projets/Llm/Models/mistralai/Mistral-7B-v0.1.gguf --host 0.0.0.0 --port 8000

We are now going to install a client interface that will converse with our API.

gh repo clone https://github.com/mckaywrigley/chatbot-ui.git

cd chatbot-ui/

docker compose up

I slightly modified the docker-compose to calibrate it with my configuration.

version: '3.6'

services:

chatgpt:

build: .

ports:

- 3000:3000

restart: on-failure

environment:

- 'OPENAI_API_KEY=sk-XXXXXXXXXXXXXXXXXXXX'

- 'OPENAI_API_HOST=http://host.docker.internal:8000'

- 'DEFAULT_MODEL=/Data/Projets/Llm/Models/mistralai/Mistral-7B-v0.1.gguf'

- 'NEXT_PUBLIC_DEFAULT_SYSTEM_PROMPT=You are a helpful and friendly AI assistant. Respond very concisely.'

- 'WAIT_HOSTS=http://host.docker.internal:8000/'

- 'WAIT_TIMEOUT=${WAIT_TIMEOUT:-3600}'

extra_hosts:

- "host.docker.internal:host-gateway"

The program is not really designed to interact directly with MistralAi. I might not have chosen the simplest example. Know that with the models specified as compatible on the official repository, the integration is better.

Here's a glimpse of my little question about the mayonnaise recipe via Chat-ui.

Now let's see what it looks like by pushing quantization to 8 bits with the type at q8_0.

python3 llama.cpp/convert.py /Data/Projets/Llm/Models/mistralai --outfile /Data/Projets/Llm/Models/mistralai/Mistral-7B-v0.1_q8.gguf --outtype q8_0

main -m /Data/Projets/Llm/Models/mistralai/Mistral-7B-v0.1_q8.gguf -i --interactive-first -r "### Human:" --temp 0 -c 2048 -n -1 --ignore-eos --repeat_penalty 1.2 --instruct

As you can see, it's much faster! And perfectly usable.

So concludes this little presentation of a self-hosted stack. As you can see, with very little means, it works. Imagine with hardware designed for it! Be aware that MistralAi is a French company. Apart from use on an inexpensive laptop, note that the entire suite of business tools is available for this model.